LLM-powered assistant with electrotactile feedback to assist blind and low vision people with maps and routes preview

Abstract

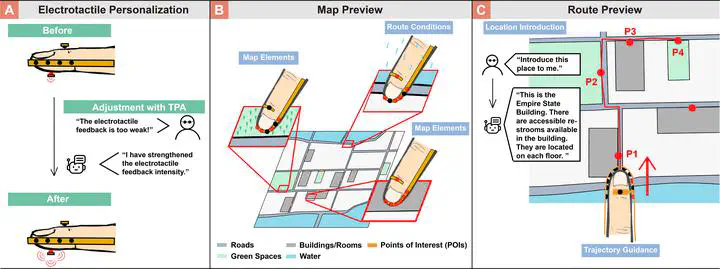

Previewing routes to unfamiliar destinations is a crucial task for many blind and low vision (BLV) individuals, helping them ensure safety and build confidence before a journey. While prior work has primarily supported navigation during travel, less research has examined how best to assist BLV people in previewing routes on a map. We designed a novel electrotactile system worn around the fingertip, together with the Trip Preview Assistant (TPA), to convey map elements, route conditions, and trajectories. TPA uses large language models (LLMs) to dynamically control and personalize the electrotactile feedback, making complex spatial map data easier for BLV users to interpret. In a user study with twelve BLV participants, our system improved efficiency and user experience when previewing maps and routes. This work advances the accessibility of visual map information for BLV users previewing trips.