Abstract

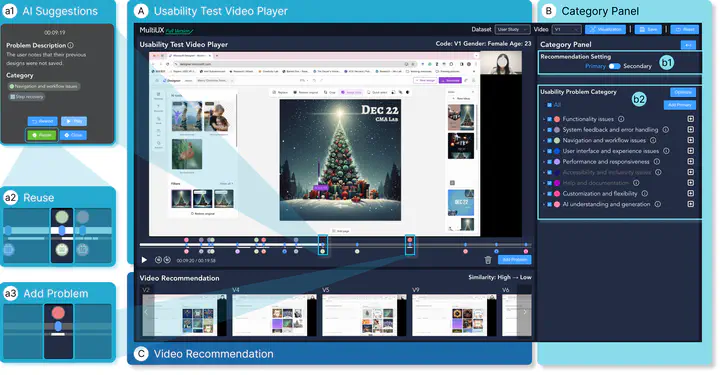

Analyzing multiple usability videos is essential for identifying common problems and prioritizing them. While existing tools assist in identifying problems within single videos, manually comparing and categorizing those problems across videos remains time-consuming. Inspired by prior work suggesting that a human-AI collaborative approach could make the analysis of a single video more efficient and complete than either humans or AI alone, we extended this approach to investigate its effectiveness for analyzing multiple videos. We designed MultiUX to support strategic analysis of multiple videos by comparing and categorizing problems across videos and by optimizing the analysis sequence through video recommendations. In a between-subjects study with 20 UX evaluators, we compared MultiUX to a baseline. Results showed that, within a given time, MultiUX helped participants analyze more videos and identify more common problems that were encountered by more users. Additionally, MultiUX guided participants to analyze multiple videos more strategically.