Abstract

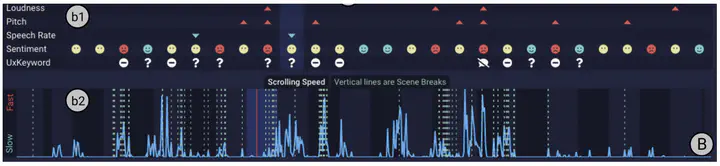

AI has been increasingly adopted in user experience (UX) analysis, in which UX evaluators review test recordings to identify usability problems. However, most AI-infused systems take a fully automatic approach, which leads to distrust among UX evaluators. In my dissertation work, we consider AI as assisting, rather than replacing, human judgment. Through an international survey, we investigated the current practices and challenges of UX evaluators and identified an opportunity for AI assistance. We then studied the nuanced cooperative work between UX evaluators and AI, in which the AI took the form of either non-interactive visualizations or interactive conversational assistants (CAs). As next steps, we will build on our findings about the reactive Q&A dynamic with CAs by exploring how a proactive approach, or a combination of visualizations and CAs, may better support UX evaluators. This research will identify interactions and representations that give rise to productive and trusting collaborations with AI.